There are many occasions in your ETL development process in which you need to work with files from an HTTP service endpoint and you are tasked to consume the data with the files. Such files can be a JSON file, an XML file, an EDI file, or a flat file (such as the CSV format). Traditionally, such file data consumption requires you to first download the file(s) from the endpoint before you can process the data contained within them. This generally requires two control flow and data flow tasks, with the first one to download the file(s) from the service endpoint that saves them to a staging location in the local file system, and then use another data flow to read and process the file contents. In today's blog post, we will discuss an alternative approach, in which you don't have to save the files locally, but instead, the file content is saved in your ETL process' memory, which you can consume the in-memory data directly in your next data flow task. Such in-memory file handlings provide some unique advantages, including package or solution transportability (easily move to another environment with restrictive local access), simplified data flow design, and the ability to manage it all within SSIS infrastructure. This also opens up the possibility to easily switch to a different SSIS runtime environment when the needs arise, thanks to the elimination of the dependency on local file storage. Let's take an example, where a set of JSON files from an HTTP web service endpoint, needs to be downloaded and processed in order to extract data from within them. This can be easily achieved using the full suite of KingswaySoft SSIS components made available in our SSIS Productivity Pack product. To be more specific, we will be using the HTTP Requester Task/Component to download files from custom URLs or endpoints. To parse and process JSON files, we use the JSON Source component as well. The combination of the two components can be used to achieve both scenarios including downloading and physically saving the file, or otherwise the file can be downloaded and saved in memory without the dependency on local file storage before processing.

Now, let's show how it can be achieved. For our demonstration, the below components will be used:

- HTTP Requester Task

- JSON Source component

- Dummy Destination (which can be substituted with pretty much any other Destination component that you wish to write to)

It is important to mention, that the same in-memory file processing capabilities are also offered in some of the other SSIS data flow components offered in the SSIS Productivity Pack including.

With that in mind, let's get started.

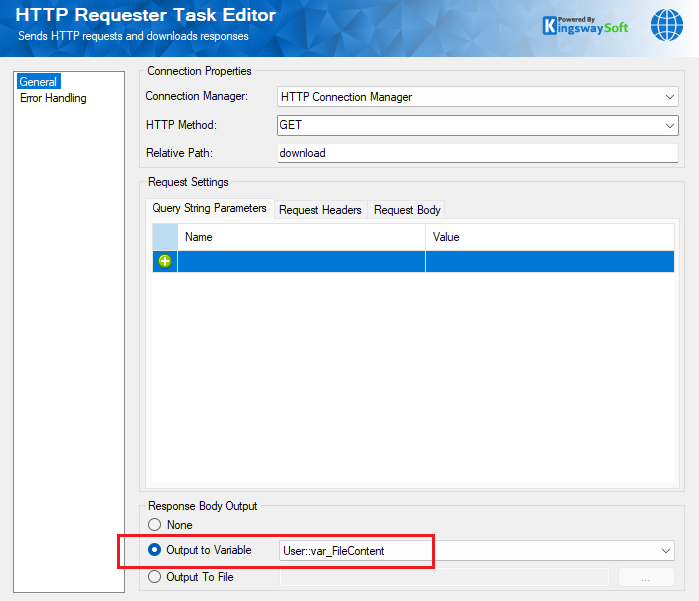

Configuring the HTTP Requester Task

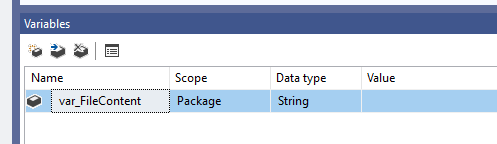

The HTTP Requester Task is a control flow task component that can be used to send an HTTP request and receive a response, typically used to download files. Once you configure and select the HTTP connection manager (Online Help Manual link here for more information), you can enter the other details such as the HTTP method, relative path, any query string parameters (if any), etc. As we are looking to process the file in memory, an important decision will have to be made with the Response Body Output option on the screen. To achieve this, we will be choosing the Output to Variable option, which allows us to pick a String or an Object variable from the list of available variables or parameters in the package/project scope. Note that in our case, the file content to be downloaded is in JSON format, and hence we choose a String variable that we have created in advance. If in other cases you wish to download a binary object, then an Object variable can be chosen.

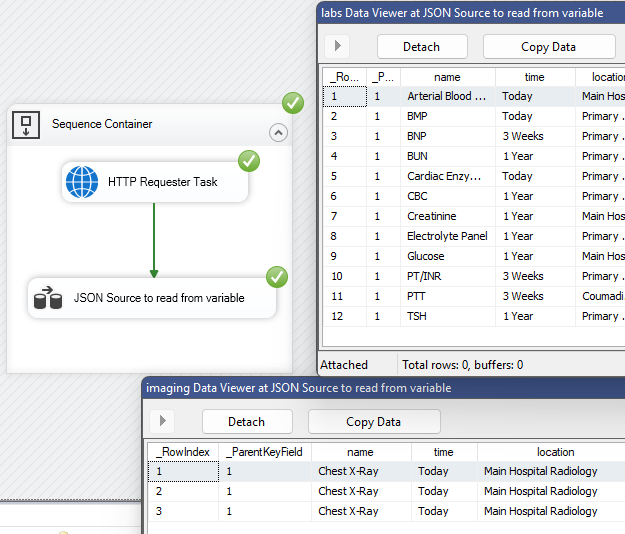

Once this is done, save and close the task, and then, drag and drop a new Data Flow Task, and connect the HTTP Requester Task to it (The overall design can be viewed in a later section). And within that Data Flow Task, put a JSON Source component, and let's see how this can be designed.

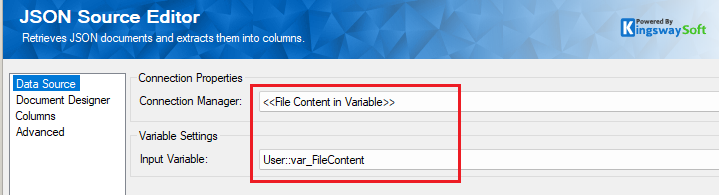

Configuring the JSON Source component

The JSON Source component supports a source type called <<File Content in Variable>> and this can be used to reference a variable instead of a local file as its Source to read data from.

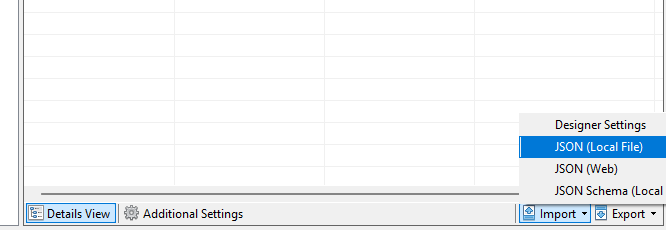

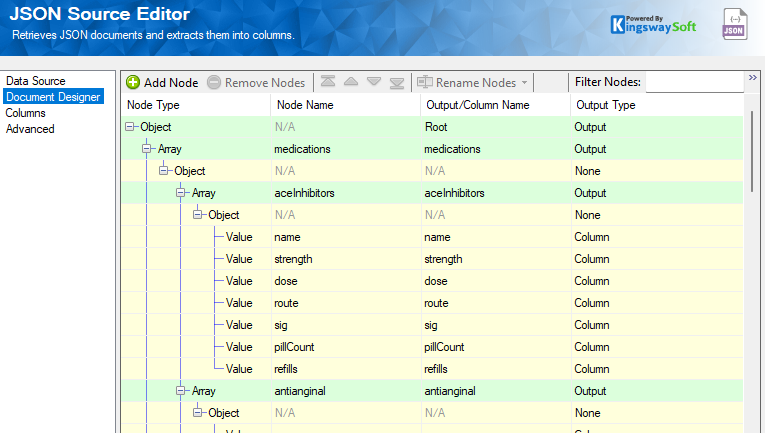

Once we have the connection manager specified properly, we can then move on to select the variable that has been specified as the output for the HTTP Requester Task. Now, in order to parse the values out correctly as the variable gets populated with the JSON body at runtime, we would need to predefine the document design in the JSON Source component. This can be done by using the Import options on the Document Designer page.

At design time, you could create a local file, and import it to generate the document designer. You can also import a file from an HTTP service endpoint directly. Once the import is done, the component should populate the document design accordingly with all columns and outputs generated as required, those will become automatically available to the SSIS pipeline, which can be then mapped to your Destination components in the same data flow. To know more about how the JSON Source document design metadata works, please refer to our Online Help Manual here.

Once we have the above two configured, we are ready to run our package. Note that the HTTP Requester, and the Data Flow Task that contains the JSON Source flow, need to be connected in series. Then, as you can see at runtime, the HTTP Requester task assigns the response body from the downloaded file to the variable, which in turn gets passed onto the JSON Source component, parsing out the values as per the document designer.

Note that the documentation designer for the JSON Source component decides how the file is parsed. You have all the options in our JSON source to fine-tune the metadata of the ETL process to make it best fit your business requirements.

Conclusion

Using a simple design such as the above, you can easily handle in-memory file downloads and reading, avoiding the dependency on local file storage. This simplifies your package design and removes the cluttering of your ETL process. The above example works for our JSON source component, and the same strategy can also be used for other data-related components, including CSV, and XML files in our XML Source Component and Premium Flat File Source Component as mentioned previously.

We hope this has helped!