Dynamics 365 Customer Insights Journeys is an incredibly important tool for businesses as it can provide insight and help visualize the journey that customers take when engaging with your products or services. The data can be used to help you improve how your business engages with customers and enable a better understanding of the journey life cycle. Due to the inherent value of Dynamics 365 Customer Insights Journeys data, you may need to work with the Customer Insights Journeys data in your data integration solutions. With our most recent v25.1 release, this can be easily done with our Premium Data File Source component via the recently introduced OneLake Connection Manager in the mentioned release. Thanks to the new capability introduced in the new release, Dynamics 365 Customer Insights Journeys data can now be effortlessly integrated with Microsoft Fabric, which is Microsoft's cloud data analytics platform and is meant to act as a unifying platform for various Microsoft Services such as Power BI, Azure Synapse, OneLake, etc. A big advantage of Microsoft Fabric is, that it allows simplified data management and seamless access to our data within a unified data platform that uses OneLake as the underlying data infrastructure. With such background in mind, our objectives of extracting Dynamics 365 Customer Insights Journey data in an SSIS data flow can be achieved using the following two components shipped within our SSIS Productivity Pack.

In this blog post, we will be taking a deep dive into how this can be achieved.

Integrating Customer Insights Journeys with Fabric

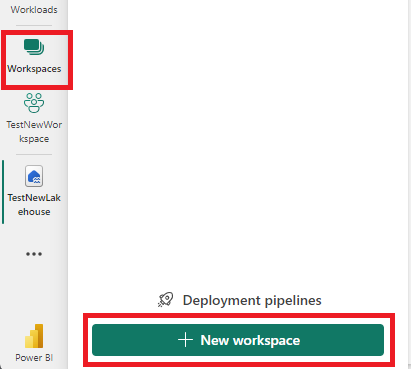

To start, you will need to have a Workspace and Lakehouse instance within your Microsoft Fabric. Workspaces are Microsoft Fabric containers that can contain items such as Lakehouses and reports. A Lakehouse is a data architecture platform that is used for storing and managing unstructured or structured data in a single location. With Lakehouses you can also create shortcuts to various data sources that you might have. Shortcuts are a useful data ingestion method that allows you to reference the Source data without needing to copy the actual data over. When doing so with Dynamics 365 Customer Insights data, this not only allows you to avoid moving data outside of your Dataverse instance but also will make the data available in real-time within Microsoft Fabric. This will allow you to integrate the journey's data into Fabric, which then be read with KingswaySoft components. You can either create a new Workspace or work with an existing one. Afterward, you can create or open a Lakehouse, and you can choose Get data, select New shortcut, and finally select Dataverse as the Source type.

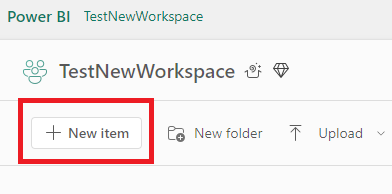

When creating a new shortcut, you have the option to either create a new connection or use an existing connection. If you are creating a new connection you can then give your Dataverse environment URL and credentials. Then afterwards you can select the Customer Insights Journey tables you wish to read. In this example, we want to find the Emails that were sent.

Setting up OneLake Connection

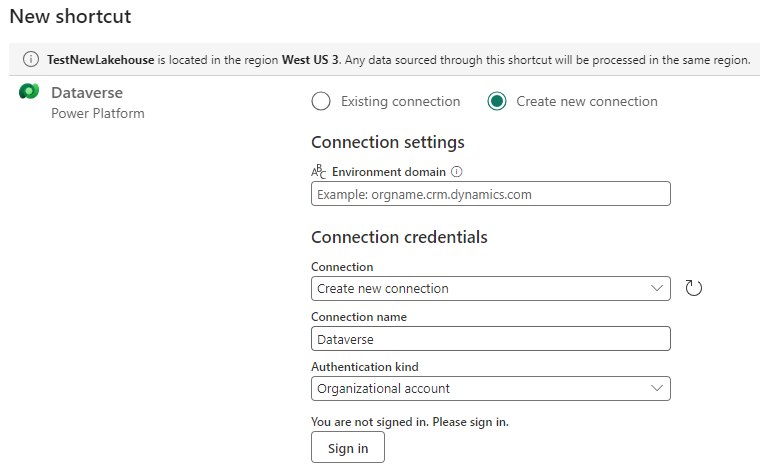

Once we have made the Journey's data available from within Fabric, we can proceed to set up ETL processes to read the data using SSIS packages as required by the business. Since Microsoft Fabric uses Onelake as the underlying data storage, we will be accessing Microsoft Fabric data with our newly introduced OneLake Connection Manager. To do this we will need to make sure we have a registered Azure app that will provide us the necessary Client App Id, and Client Secret. First, we will log into Azure Portal and navigate to Microsoft Entra Id.

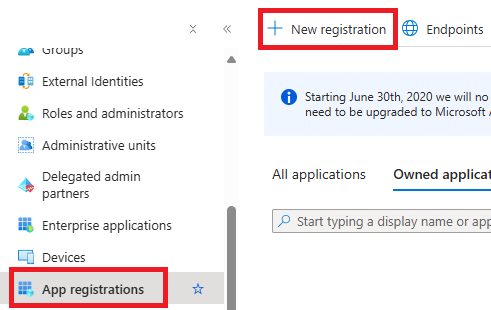

Then on the left pane, click on “App registrations”, then select “New registration”.

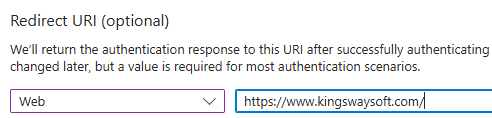

Once on the Registration page, we will need to provide a Redirect URI. For the Redirect URI, you can supply any valid HTTP URL that does not perform any redirects. Generally speaking, you should be using a URL that you can trust. In our demonstration, we use our website URL: https://www.kingswaysoft.com/

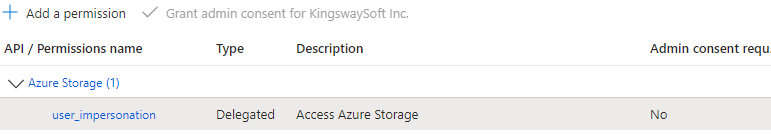

After providing a redirect URL we will add the necessary permissions for the app. First, click on “API Permissions”, click on “Add a permission” and select Azure Storage which will grant you access to OneLake.

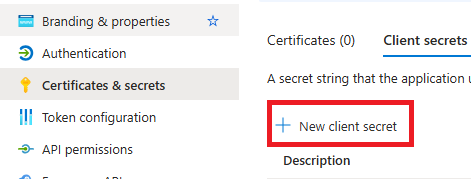

You can then secure your app by creating a Client Secret. To do so, you would first go to “Certification & secrets” in the left navigation and then click “New Client Secret“. Please note that the Client Secret is available to view and copy only at the time when it is created. If you forget to copy it at the time of creation, there is no way to reveal the full client secret. In such cases, all you can do is to create a new client secret and you may remove the unrecoverable client secret if you know it is not used elsewhere.

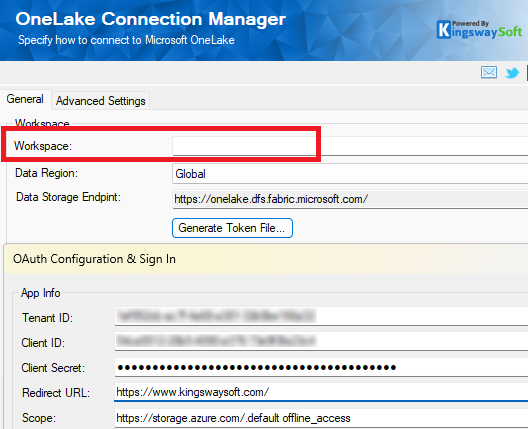

Now that you have a registered app you can go to your SSIS development environment to start configuring the OneLake Connection Manager. You will need to enter the name of the Workspace that you used for integrating Journeys Fabric. Then you will need to generate a new token file if you do not already have one. You can click on the "Generate Token File", which will bring up the OAuth token generator. You can then enter the details that were previously generated in your Azure app. Once you press OK, it will take you to your authorization portal, to which you can enter your user and password details.

Once authorized, you will see that the browse redirects to the redirect URI that was specified in the Azure portal app registration, and appended to it, would be the code. Copy the whole URL, and paste it on the window that had opened up in SSDT. Afterwards, the component will generate a token file which will be saved on your machine; you can encrypt it using an alphanumeric password. You can then click on Test Connection to confirm the connection works.

Reading Customer Insights Journey Data

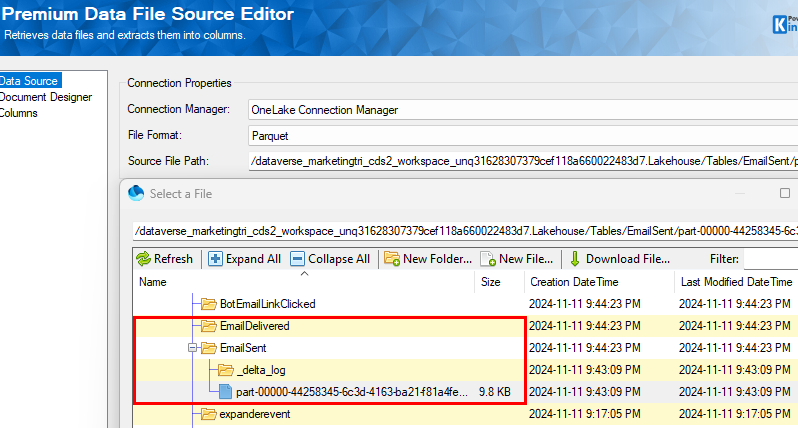

Now that we have the OneLake Connection Manager configured, we are ready to get to our final step which is to read and consume Customer Insights Journey data in the SSIS data flow. For clarity purposes, the journey’s data is populated as tables in Microsoft Fabric, the data is actually stored in a parquet file format. With this objective in mind, we will resort to our Premium Data File Source component which has support for reading data from parquet files that reside on either a local file system or a cloud storage location such as Microsoft OneLake. We will drag and drop a Premium Data File Source component from the SSIS Toolbox to the data flow. We can then configure the component to use the OneLake Connection Manager we just created and set the component's File Format option to Parquet. After the Connection Manager and file format have been configured, we should be able to browse the structure of our OneLake catalog. In the Source file path field, we can click on the ellipsis which will open up a file explorer. There we can find our Customer Insights Journey data file available. In our example, we want to read all emails we have sent, as such we will select the Email Sent analytics data.

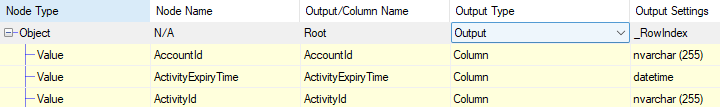

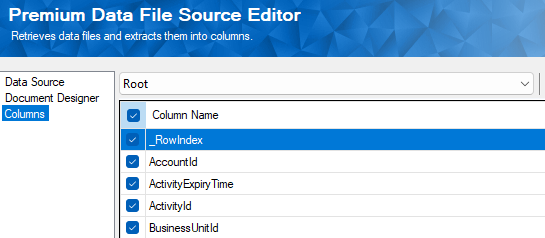

After selecting the analytics data the component should automatically import the metadata of the parquet file. This might be a good time to have a quick review of the metadata to make sure that they are accurate. On the Document Designer page, you should be able to see the list of all available nodes present in the parquet file. You can make adjustments to the Document Designer depending on your needs, such as adjusting the output settings, name, type, etc.

Any changes that you make to the Document Designer page should immediately be reflected in the Columns tab.

Once you have everything configured, you can click the OK button to accept the configuration and design. Now, you can connect the source component to a downstream component to facilitate the data extraction and movement. When executed, your Dynamics 365 Customer Insights journey data serves as the data source of your data flow task by feeding the data to the downstream pipeline components, in order to achieve your integration needs.

Conclusion

In this blog post, we have discussed how to set up an Azure app for connecting to OneLake, integrating Customer Insights Journeys data that resides in Microsoft Fabric, this is accomplished in the SSIS ETL process. As you can see in the process above, you can easily achieve the purposes with maximum efficiency by utilizing relevant KingswaySoft components. We hope this blog post is able to provide some helpful insights on how to achieve the goals of extracting Dynamics 365 Customer Insights Journey data efficiently.