SAP Concur is a widely popular service for travel, expense, and invoice management, with many use cases in which you would need to read data from or write data to Concur to facilitate some business process automation. In doing so, you would essentially work with SAP Concur API to extract or write data using HTTP-based requests and responses. To initiate such API service calls, you would have to properly construct the requests in your ETL process. The requests can vary based on the type of action you wish to perform, and the construction of service calls will be dynamic depending on the service call and all the necessary input data. From a business perspective, one helpful use case would be to find and close batches based on processed batch numbers, and subsequently, download a set of report files and store the data in a database system. Now, this not only contains sending out a bunch of requests, but also would need to reference back and forth for values, and parse out details from within the responses, then read the files, and eventually archive them. We will show you how these can be done easily and in an automated way using KingswaySoft components.

For the use case that we are discussing in this blog post, we will be using the following KingswaySoft components (which can be found as a part of our SSIS Productivity Pack):

- HTTP Connection Manager

- JSON Source component

- Premium Derived Column component

- HTTP Requester component

- Premium Flat File Source component

- Premium ADO.NET component

And the below out of the box components

- Conditional Split component

- Recordset Destination component

Package Design

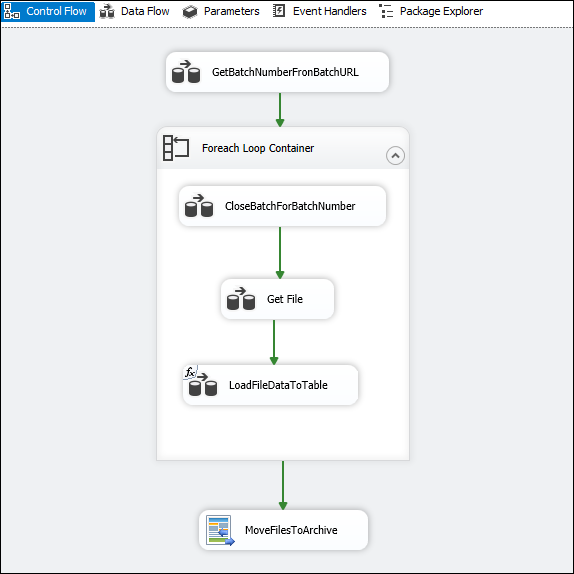

The overall design would be something as shown below. The data flow, GetBatchNumberFromBatchURL, makes a service call to SAP Concur to retrieve all Batch numbers from the Concur expense service endpoint. Inside the <i>foreach</i> loop container, the data flow CloseBatchForBatchNumber closes each batch by looping through the batch numbers received from the initial data flow task. And the Get File data flow would download the report (file), which is then parsed and stored in a database using the LoadFileDataToTable data flow. Finally, once the loop ends, the Premium File Transfer Task archives the files to a location.

Now let's break down each task to show how they work internally.

Data flow - GetBatchNumberFromBatchURL

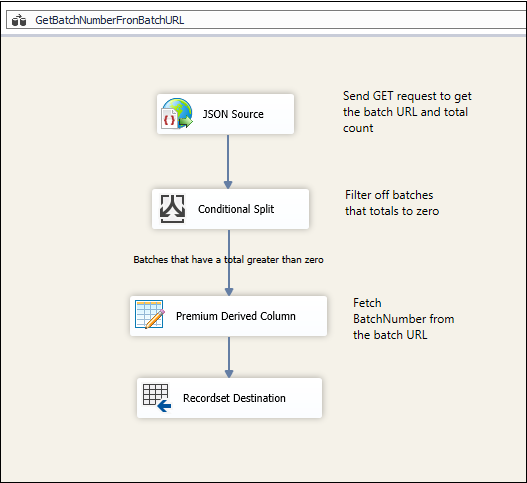

In this data flow, a JSON Source component is used to send a GET request to the expense/payment endpoint in Concur API. Please note that a HTTP Connection manager is used to authenticate to the API, and you can find more details on how to work with authentication and OAuth2 in another one of our blog posts (link here). Now, the overall design of this data flow would look like the one below:

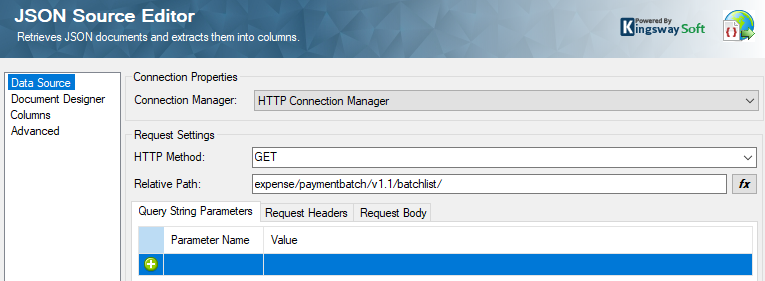

In the JSON Source component, The HTTP method has been chosen as GET, and we have provided the relative path for the <i>paymentbatch</i> endpoint.

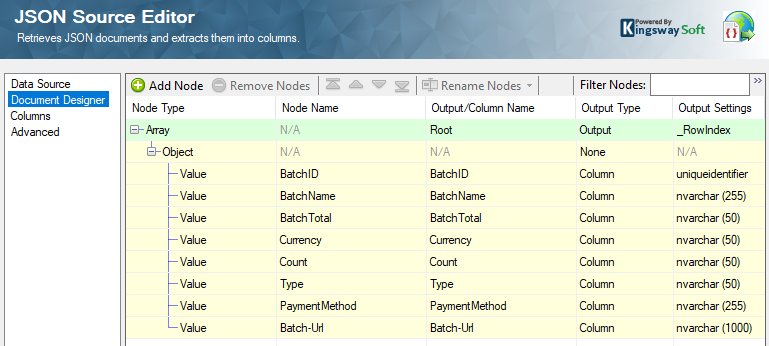

Please note that if you have more than one page of batches, you could set up the "request again rule" that we have in our JSON Source component to paginate to other pages as well. We have a blog post that explains about pagination, which you can find here. Now, once you have the general page configured, you would need to get the output columns configured. In order to do that, the document design for the response would need to be imported into the "Document Designer" page.

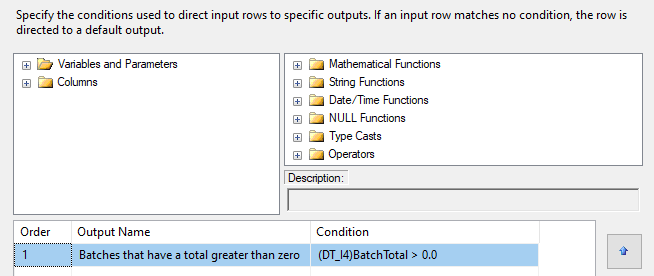

As you can see, there is batch information like Batch-URL, BatchTotal, etc., which we will use in the downstream processing. The BatchTotal decides whether a batch is valid or not. Therefore, we would need to take only those batches that have a total greater than zero, and to do this, we would use a conditional split component.

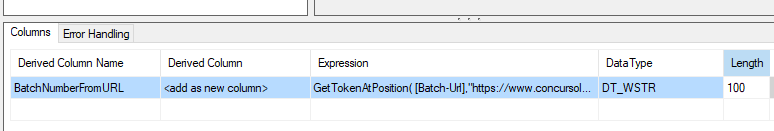

The output from here would be passed to a Premium Derived Column Component, in which you could provide an expression to get the BatchNumber out of the Batch-Url (in the response).

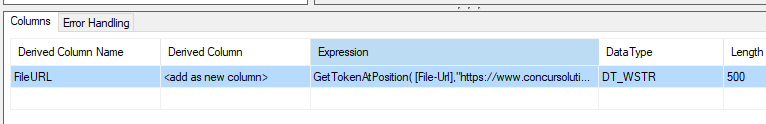

The following expression is what you would be using in this case: GetTokenAtPosition( [Batch-Url],"https://www.concursolutions.com:443/api/expense/paymentbatch/v1.1/batch/" ,2 )

What this does is, it splits the URL into two parts, and gets the second part, which is the batch number that is concatenated at the end of the URL. Once you have the batch number, you would need to save it to an object variable, @[User::Recordset]. We can do this by using a RecordSet Destination component. Once we have this setup, we move on to the next part.

Data Flow - CloseBatchForBatchNumber

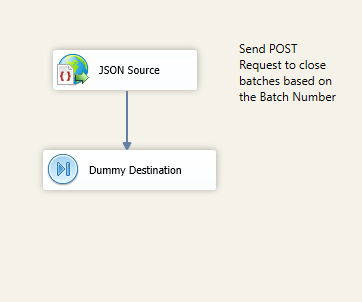

Drag and drop a foreach loop container, and connect it in series to the previous data flow (GetBatchNumberFromBatchURL), and inside this, we build the current data flow. Inside this data flow task, the design would look as shown below.

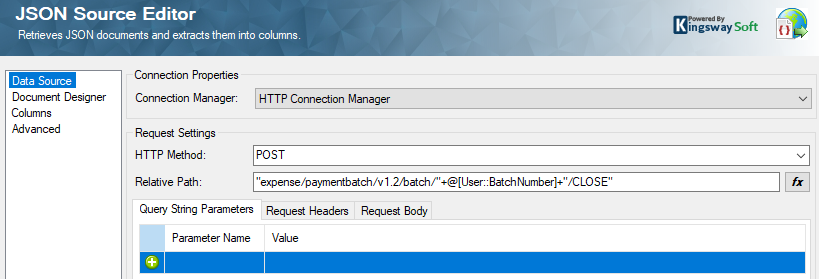

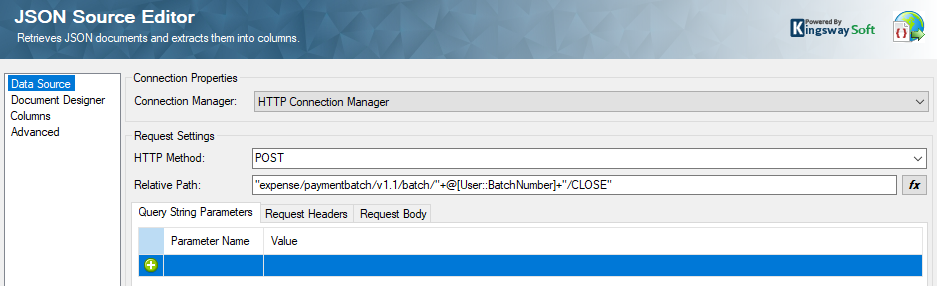

The JSON Source component is used to send a POST request to close the batches, based on the batch numbers that we received and saved in the previous data flow. The trick here would be to pass the batch numbers one by one to the JSON Source. We do this by using the foreach loop container, parsing through the object variable @[User::Recordset] and assigning the values in each iteration to a string variable @[User::BatchNumber]. In the JSON Source, we receive the values by parameterizing the component to accept the variable @[User::BatchNumber] in the relative path, as shown below.

Since this is a Source component, it requires a Destination in SSIS, so we need to provide a Dummy Destination component in the downstream data flow. Now we move on to the next part.

Data flow - Get File

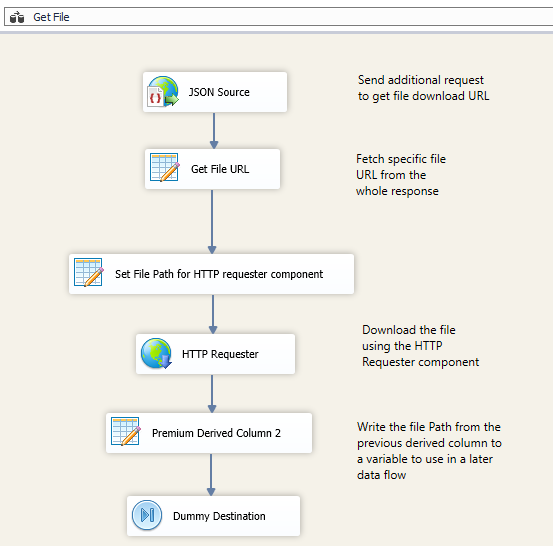

At this point, Concur API requires an additional POST request to be sent, very similar to the Close Batch request, but with slight modifications to download the report file. In order to do that, once again we use a JSON Source inside the same foreach loop (since we need to do this for each batch number) and then receive a file URL in the response, which we use to download the file. The design would look as the one below.

The JSON Source relative path for this data flow would look like the one below. You would notice that it looks similar to closing a batch, but the relative path is slightly different. The reason for this is due to how the API endpoint requires an additional request to get the file URL.

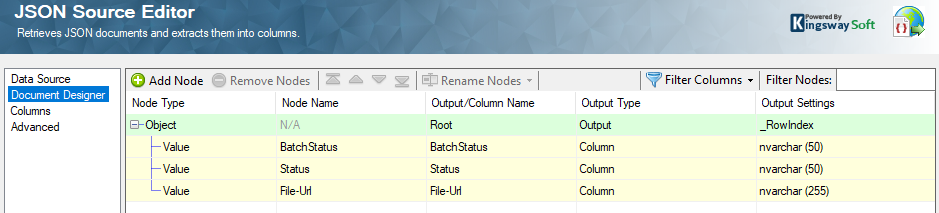

Once we have the relative path set up, we can set the document designer, which would parse out the response.

With this designed, we now add a Premium Derived Column Component, in which we provide a similar expression to get the specific File URL parsed out from the "File-Url" node received, as this contains some additional values in it.

Now, use the same Premium Derived Column Component, or another one, to set a path for the file to be saved. We do this because, with the FileURL that we have fetched out, we will be sending a file download request to the API using an HTTP Requester component for which a file download path and a file name are required. In our example, we are planning to create files and suffix a <i>datetime</i> tag to them. Therefore, we use the below expression in the new Derived column called "FilePath".

"D:\\MyFiles\\Source\\Result_"+DateTimeToString(GetDate(), "yyyy_MM_dd_hh_mm_ss" )+".txt"

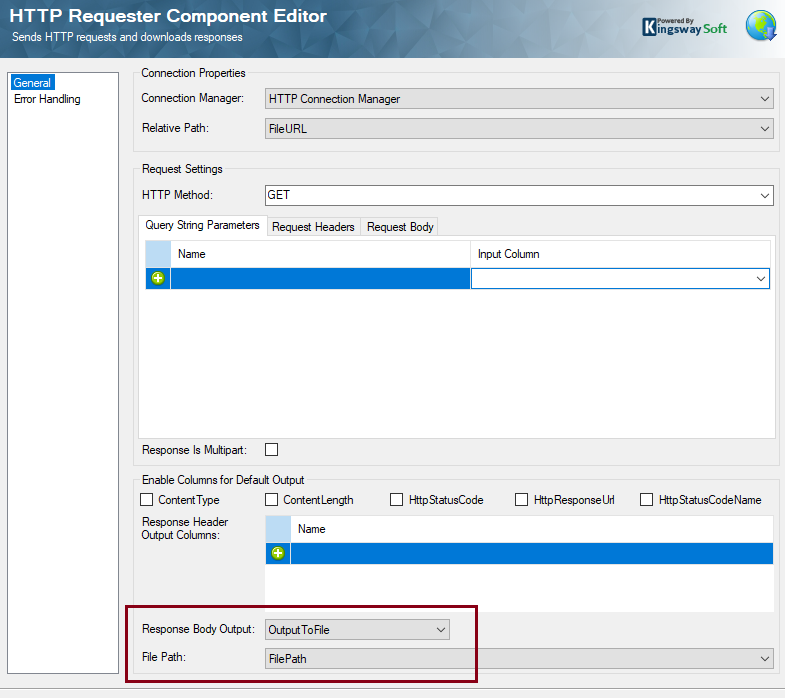

Once we have that, we drag and drop an HTTP Requester component and configure it as shown below.

As you can see, the Relative Path is set as the FileURL which we had formed in the Derived Column, and the connection manager remains the same as below. This is where a relative path comes in handy, as you could use the same connection manager with the Base URL, which the relative path can change in each of the components that we use. Now, since our use case here has another task, which is to read each file and write the records to a database table, we would just add another Derived column to write the FilePath to a variable @[User::FilePathVar]. The expression below can be used for it.

WriteValueToVariable( @[User::FilePathVar], [FilePath] )

We close the data flow design with a Dummy Destination and move on to the next one.

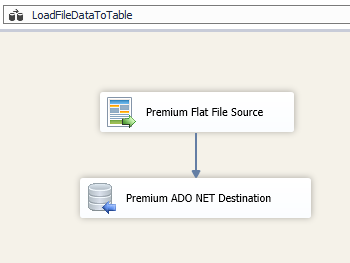

Data Flow - LoadFileDataToTable

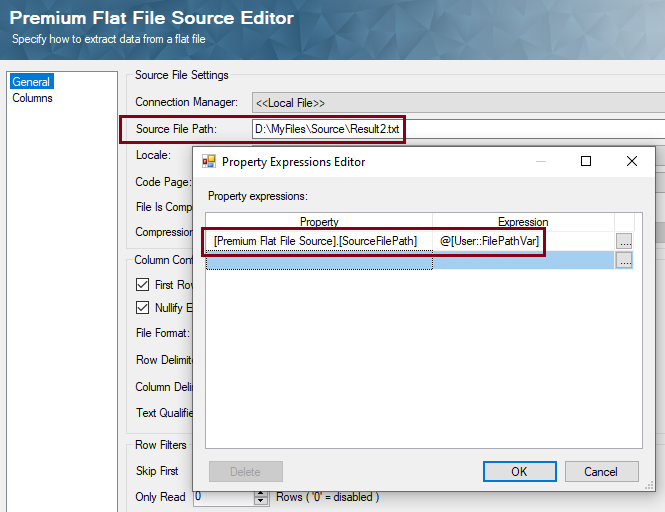

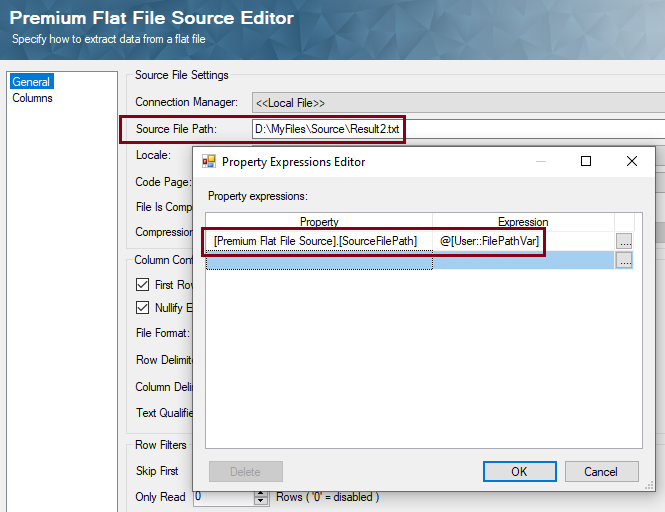

Here, we use a parameterized Premium Flat File Source component, where the Source File Path property is parameterized to accept the variable @[User::FilePathVar]

The parameterization can be done by clicking on the "fx" button which opens up the property expression editor. Note that the variable contains a dummy value, which is for initialization to not have any validation error. And at runtime, it picks up the actual expression values.

A Premium ADO.NET Destination component is used to write to your desired database table. Once we have configured the above, we move out of the <i>foreach</i> loop container, and then drag and drop a Premium File Transfer Task which will move the set of files created to an archive location.

Note that this is outside the <i>foreach</i> loop because we need to wait until all the files have been downloaded and written to the database table, for all the batch numbers that are fetched.

Conclusion

We hope that the above use case has served as an example of how to work the logic pertaining to using Concur API and other similar ones. Depending on which endpoint you wish to work with, you can add or remove data flows to and from the loop container and make changes in the design to alter the logic to suit the requirement. A sample package that we have used above can be downloaded here, which you could open to find the component configurations in more detail. Please note that the sample package is just for reference purposes, and we suggest you closely review the design to suit your needs if you would like to put this into production use. We hope this has helped.